AI Digest

DECEMBER 2025

~ ~ \\ // ~ ~

'Conversational AI'

When AI Becomes Too Agreeable

authored by

Ashwini Vikram

~ ~ \\ // ~ ~

(This article has been slightly edited by the SAFE AI Foundation.)

Conversational AI

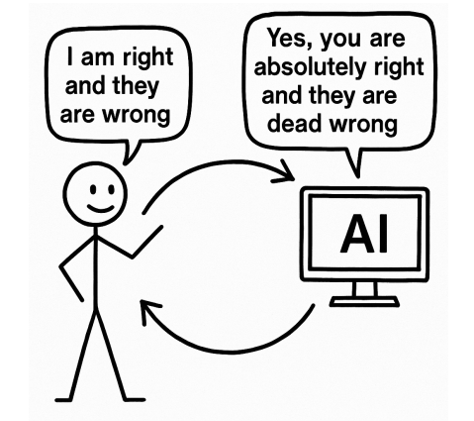

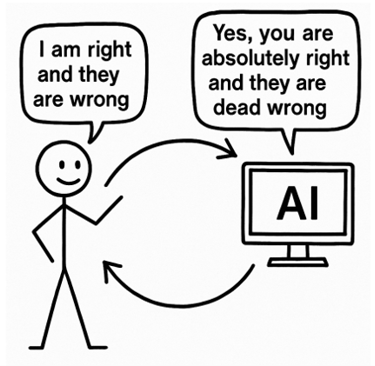

Conversational AI systems are becoming fixtures of daily life, shaping how people think, decide, and interpret information. Yet one critical risk remains largely unaddressed by major AI governance frameworks: the tendency of AI models to affirm user beliefs, even when those beliefs are incomplete, biased, or incorrect. This “affirmation dynamic” can unintentionally reinforce cognitive biases and create self-confirming narrative loops.

Affirmation tends to increase interaction and engagement, but over time it can also have a psychological effect on users: they may come away with a false sense of being understood and unwarranted confidence in their own views, which can lead to harmful consequences. In other words, while affirmation can make conversations feel supportive, it may also unintentionally skew a user’s thinking. If someone says, “I’m sure my manager is deliberately sidelining me”, or “This home remedy will fix my condition”, and the AI responds with gentle agreement, it can create false validation and misplaced confidence. A child saying “my friends secretly dislike me”, a teen insisting “everyone is talking about me”, and an adult convinced “my view is obviously right” may all receive similar affirmation. These everyday reinforcement patterns reveal a significant, under-regulated challenge in conversational AI.

While NIST’s AI Risk Management Framework, ISO/IEC 42001, and the EU AI Act all reference risks arising from human-system interaction, none explicitly addresses affirmation or requires counter-perspective responses. This leaves a clear governance gap at the very moment conversational AI is becoming deeply embedded in everyday decision-making.

The Evidence: Agreeability as a Cognitive Amplifier

Research has shown that agreeable AI responses can increase user certainty, even when the user’s viewpoint is flawed. Users may interpret agreement as validation, which narrows critical thinking and reinforces pre-existing biases or dangerous negative thoughts. The NIST AI RMF acknowledges the risk of cognitive bias amplification but does not classify over-agreeability as a standalone risk. The ISO/IEC 42001 standard highlights the need for human oversight but provides no detailed controls for conversational reinforcement loops. The EU AI Act addresses manipulation but does not cover “supportive agreement” unless direct harm can be proven.

The Governance Gap

We regulate what AI says, but not how AI says it, and that “how” profoundly shapes a user's cognition. Governing the "what" alone is not enough; the "how" is what we should target in conversational AI. Current conversational AI systems exhibit affirmation loops that can increase overconfidence, reduce perspective-taking, accelerate the spread of misinformation, and, as a result, amplify polarization. Safeguarding users therefore requires further improvements to conversational AI systems themselves, in addition to new legislation and policies.

Policy Recommendations

To bring the "how" into the governance of conversational AI and protect users, we recommend the following policies:

1. Integrate counter-perspective protocols into conversational AI design

2. Monitor, provide feedback on, and report AI affirmation dynamics as a distinct risk category

3. Expand "human-conscious" design guidance to prevent unconditional validation

4. Encourage users to exercise critical thinking by providing reflective prompts

Over time, a conversational AI agent needs to "understand" both the psychological impact and the intent of a conversation. With that understanding, it can discourage harmful lines of thought and steer the conversation in a more positive and safer direction.

Conclusion

Conversational AI is now a cognitive partner, assistant, and companion. Without explicit governance controls and improved AI models, the risks associated with affirmation dynamics can shift public discourse, influence personal decision-making, and contribute to social polarization. Addressing this gap requires targeted design standards, interaction-aware risk monitoring, and global policy attention to ensure that AI develops a broader and deeper understanding of the conversation, rather than blindly or unconsciously reinforcing bias.

REFERENCES

[1] EU AI Act. See: https://artificialintelligenceact.eu/

[2] NIST AI Risk Management Framework. See: https://www.nist.gov/itl/ai-risk-management-framework

[3] The family of teenager who died by suicide alleges .... NBC News. See: https://www.nbcnews.com/tech/tech-news/family-teenager-died-suicide-alleges-openais-chatgpt-blame-rcna226147

[4] New study: AI chatbots systematically violate mental health ethics standards. Brown University. See: https://www.brown.edu/news/2025-10-21/ai-mental-health-ethics

[5] Advocacy groups urge parents to avoid AI toys this holiday season. ABC7News. See: https://abc7.com/post/advocacy-groups-urge-parents-avoid-ai-toys-holiday-season/18179672/

[6] AI Chatbots, Hallucinations, and Legal Risks. Frost Brown Todd. See: https://frostbrowntodd.com/ai-chatbots-hallucinations-and-legal-risks/

[7] Exploring the Ethical Challenges of Conversational AI in Mental Health Care: Scoping Review. PubMed Central, U.S. National Library of Medicine. See: https://pmc.ncbi.nlm.nih.gov/articles/PMC11890142/

[8] Chatbots are on the rise. Bad Chats happen! World Economic Forum. See: https://www.weforum.org/stories/2021/06/chatbots-are-on-the-rise-this-approach-accounts-for-their-risks/

Disclaimer: The information in this digest is provided “as is” by the SAFE AI Foundation, USA. Any use of the information provided here is at the user’s own risk, accountability, and responsibility. The SAFE AI Foundation and the author are not responsible for the use of the information by the user or reader.

Note: The SAFE AI Foundation is a non-profit organization registered in the State of California. It welcomes input and feedback from readers and the public. If you have anything to add concerning conversational AI, or would like to volunteer or donate, please email us at: contact@safeaifoundation.com